Data Pipelines, API servers, and Node.js: JavaScript On The Command Line

I’m going to take my talents to South Beach. - LeBron James, basketball star

TIME LIMIT: 3 HOURS

Taking Our Talents to The Backend

Time to get wild.

We have covered a number of front-end subjects so far: browsers, developer tools, basic user interface principles like the box model, the three essential languages of webpages (HTML/CSS/JS), HTTP networking, Web APIs like DOM and Fetch, the Bootstrap and jQuery libraries, and AJAX. In other words, we have studied the client in the client-server model (remember: web applications follow the client-server model). Let us now step out of the browser and shift our attention to the server side of web applications.

In this chapter, we will use Node.js to write three scripts we can run from the command line. The first script, parse.js, converts data from CSV-formatted files to JSON-formatted files (CSV = comma-separated values). The second script, test.js, assesses whether the first script is working properly. The third script, server.js, initiates and runs an HTTP server that exposes a JSON API endpoint at http://localhost:3000/api/reports.

Finally, we will create run-pipeline.sh, a command line script (shell script) that runs these three scripts. This saves us the tedious work of manually typing the same four commands (rm -rf data/processed/*, node parse.js, node test.js, node server.js) over and over. Automating a workflow prevents errors and also forces you to think clearly about the task at hand.

But first – what is Node.js?

Node in a Nutshell

Although JavaScript is a Turing-complete programming language, for roughly the first fifteen years of its life, web browsers were practically the only place you could use it. This was initially just fine. Successive versions and implementations made the JavaScript language more pleasant to write. JavaScript had indisputably won as the language of the web: every browser implemented it. If you program for the client side of the web, the dominant consumer software platform of our day, you know JavaScript.

But there was (at least) one problem. And that problem came with several costs.

The Problem: For many years, if you were a full-stack programmer (a programmer who works on both the client and server sides), you had to program in multiple languages. While you had to use JavaScript in the browser, you could not realistically use it on the “server side” because there was no widely used JavaScript runtime environment there. This is a fancy way of saying that you could not easily run files with a .js extension from the command line. For the server side of web apps, you had to program in another language like Python, Ruby, PHP, or Perl.

There were some good things about this two-language split. Coding in two programming languages is good for any programmer: it makes you more adaptable and more systematic in your approach to problems. Unfortunately, these long-term benefits were outweighed by at least two costs of the two-languages-for-web-applications deal.

One cost was a labor market cost: many people and companies were trying to figure out how to hire more full-stack engineers, and the supply of full-stack engineers was limited by the two-language requirement. Creating “a unified language of the web” is one way to lower the cost of becoming a full-stack engineer, and thus to create more of them.

Another cost was more personal and cognitive: context switching. Different programming languages have different syntaxes and peculiarities. They lend themselves to different styles of coding. If you were a full-stack engineer working on a feature by yourself, you might be writing JavaScript one hour and Java, Perl, Ruby, Python or PHP the next. Switching contexts in this manner interrupts your working brain. It reduces your programming productivity (in the short term, at least).

The Solution: In 2009, a programmer named Ryan Dahl created Node.js, an “open-source, cross-platform, JavaScript runtime environment”, i.e. JavaScript on the backend/command line.

All of this means that:

- You can now run JavaScript files from your command line.

- Because of this, JavaScript can now do a lot of non-Internet programming, like processing data, creating files on your operating system, and talking to databases.

- It also means JavaScript can now do more Internet programming: it can run backend applications that talk to frontend applications over HTTP.

Many third-party libraries have been created for the Node.js ecosystem. In this chapter, however, we will use only the official Node.js built-in API. Specifically, we will use its File System (fs), HTTP (http), and Assertion Testing (assert) modules.

In order to do this yourself, you will need to install the Node.js runtime environment on your local machine. Because you are likely to install many command line tools during your coding journey, we will do a quick dive into some of the internal workings of how executable commands like node are installed, found, and run by the shell.

Installing the node executable: A Short Journey Through the Command Line Environment

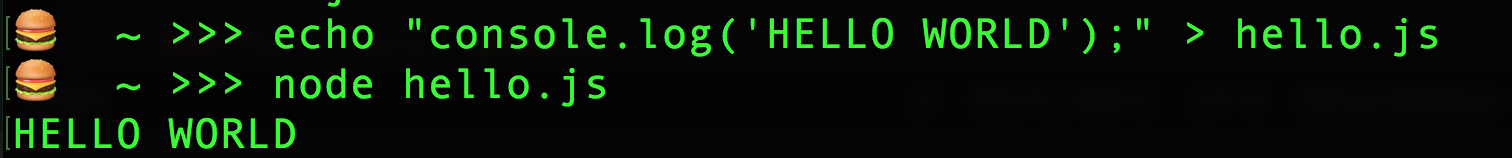

Go to the Node.js website (nodejs.org), then download and install Node.js on your computer. This chapter was written with version 8.11.0. When your installation is complete, open your Terminal application and type:

which node

which npm

If you have successfully installed Node.js, the command line should print paths like /usr/local/bin/node and /usr/local/bin/npm (the exact location can differ depending on how you installed Node). Congratulations! You now have the node and npm (Node Package Manager) commands available on the command line. If you type node and press ENTER, you will be dropped into an interactive Node environment (a REPL) you can play with. Type .exit and press ENTER to leave that environment.

It is worth briefly reflecting on the fact that the executable files behind the node and npm commands live in the /usr/local/bin directory.

/usr/local/bin - A Place For Your Executables

Most UNIX-like systems (Linux and macOS) come with a /usr/local directory. It starts out empty, or nearly so. It is a place for system administrators (you!) to put software that didn’t come built into the system. Node.js is usually not bundled with the operating system, so a manual install like the one you just did puts Node’s files in /usr/local or, to be more precise, in subdirectories of /usr/local.

Some software files, like the /usr/local/bin/node file itself, are executable: they are meant to be run. Such files are also called binary files because they often contain compiled machine code rather than human-readable text. See the bin in binary? That is why the newly installed commands were put into the /usr/local/bin subdirectory.

Let’s examine the node executable:

cd /usr/local/bin

ls -l node

The printed result:

-rwxr-xr-x 1 root wheel 35521248 Sep 10 11:36 node

As you may recall, running ls with the -l option lists files in long format. For every matching file, ls prints a line of data, with categories of data delimited by spaces. The first category describes the file type and permissions; here it is -rwxr-xr-x. This notation means “node is a regular file that is readable, writable, and executable by the file’s owner. Everybody else may only read and execute it.” This is a common permissions setting for sysadmin-installed executables.
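The permissions string is a compact view of nine on/off bits: read, write, and execute for the owner, then for the file’s group, then for everybody else. As a rough sketch of how ls turns those bits into text, the Node code below decodes the mode returned by fs.statSync. permissionString is a hypothetical helper of our own, not a Node API, and it ignores the leading file-type character and special bits such as setuid.

```javascript
const fs = require('fs');

// Decode the lower nine permission bits of a file mode into ls-style text.
// Bits are ordered owner (rwx), group (rwx), others (rwx), from high to low.
function permissionString(mode) {
  const symbols = 'rwxrwxrwx';
  let result = '';
  for (let i = 0; i < 9; i++) {
    // Bit 8 is owner-read; bit 0 is others-execute.
    const bit = 1 << (8 - i);
    result += (mode & bit) ? symbols[i] : '-';
  }
  return result;
}

// Octal 0o755 is the classic mode for installed executables.
console.log(permissionString(0o755)); // rwxr-xr-x

// Inspect a real file: process.execPath is the path of the running node binary.
// The mode also contains file-type bits, which permissionString ignores.
console.log(permissionString(fs.statSync(process.execPath).mode));
```

If your installation matches the ls -l output above, the second line should also print rwxr-xr-x.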

For a little more practice, ask the file command what kind of file node is:

file node ## on an Intel Mac, prints "node: Mach-O 64-bit executable x86_64"; Linux reports an ELF executable

So we know that /usr/local/bin is a special directory for executable files, but how does the command shell know?

We will explain by example: run the gibberish command dgaf in the Terminal. Your terminal will say -bash: dgaf: command not found. Why? The fake command dgaf could not be found because there is no executable file named dgaf in any of the directories listed in the system’s PATH.

The PATH Environment Variable: Configuring The Shell To Find Executables

As we have discussed, the command line, or shell, is your window into the operating system. It is how you talk to your operating system. Up to this point, we have mostly considered the situation just from our own perspective. Let’s consider things from the operating system’s perspective.

When you run a command like node file.js, the shell does not initially know what the node command does. It knows you want to execute a command, though, so it looks for an executable with that name. For this purpose, it reads an environment variable, which is basically a regular old variable stored in your shell session. Environment variables are very easy to set and read:

export AUTHOR="Kevin Moore"

echo $AUTHOR ## prints "Kevin Moore"
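Exported variables are also passed on to the programs the shell launches, which is how a Node script can see them: Node exposes them as string properties of the process.env object. The sketch below is only an illustration; readConfig and the PORT variable are our own assumptions, not something this chapter’s scripts use.

```javascript
// Every exported shell variable appears as a string property of process.env.
// Variables that were never set are simply undefined.
function readConfig(env) {
  return {
    author: env.AUTHOR || 'unknown author',
    // Environment values are always strings, so convert numbers explicitly.
    port: Number(env.PORT) || 3000
  };
}

console.log(readConfig(process.env));
```

Saved as, say, config.js (a hypothetical file name), running AUTHOR="Kevin Moore" PORT=8080 node config.js sets both variables for that single command only.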

The shell looks for your node executable using a very special environment variable called PATH:

echo $PATH

This should print something like

/home/me/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games

which is a colon-delimited list of directories that the shell searches, in order, for an executable whenever you run a command. You can set your PATH in a shell configuration file such as ~/.bash_profile. If you try to run a command whose executable is not in any of the directories on your PATH, bash will complain: command not found. It is not uncommon for programmers to spend a great deal of time configuring their local machines with dotfiles like .bash_profile. These files are called dotfiles because their names begin with a period. By convention, that leading period hides the file from graphical file browsers and from a plain ls (run ls -a to see them). Dotfiles are thus hidden from regular users: they are for programmers only! Regular users should not be messing with their PATH.
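To make the lookup concrete, here is a rough Node imitation of what the shell (and the which command) does with PATH: split it on colons and check each directory, in order, for an executable file with the requested name. This is a simplified sketch; which here is our own function, and it ignores things a real shell also consults, such as aliases, functions, builtins, and its cache of previously found commands.

```javascript
const fs = require('fs');
const path = require('path');

// Mimic the shell's lookup: try each PATH directory in order and return the
// first regular file with that name that the current user may execute, or null.
function which(command, pathString) {
  // path.delimiter is ':' on UNIX systems (';' on Windows).
  // Empty entries are skipped here (a real shell treats them as the current directory).
  const directories = pathString.split(path.delimiter).filter(Boolean);
  for (const dir of directories) {
    const candidate = path.join(dir, command);
    try {
      fs.accessSync(candidate, fs.constants.X_OK); // throws if missing or not executable
      if (fs.statSync(candidate).isFile()) return candidate;
    } catch (err) {
      // Not found or not executable here; keep looking in the next directory.
    }
  }
  return null;
}

console.log(which('node', process.env.PATH || ''));
console.log(which('dgaf', process.env.PATH || '')); // null, unless you really have a dgaf command
```

Because the directories are checked in order, an executable in an earlier PATH entry "wins" over one with the same name further down the list.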

Luckily for us, we are programmers. We will now start using Node.js to do real work.

Assignment: Building a JSON Data Pipeline and API Server

Scene: You’re on your third week at CityBucket. That Dynamic Report Generator is looking good. You’re ready to test that motherfucker with some live data! If only the Backend Engineer would get their shit together.

The door opens. The CEO and Lead Engineer enter. The CEO looks haggard. The Lead Engineer appears annoyed.

You: Hey hey heyyyy. How’s everybody doing this sunny Thursday morning?

CEO: Great. Anything I can get you? Are you comfortable?

You: I’m goo-ood…

CEO: Look, Backend Engineer quit this morning…

You: Hmm. Unfortunate.

CEO: …and we need you to write backend code.

Lead Engineer: Are you familiar with node?

You: Oh, I dabble in the backend arts.

CEO: Good enough for me. Here’s the score: We’re going to deliver this report generator project, right? So we have all of the customers giving us data and we generate reports for them… well, the backend isn’t up and running yet…

Lead Engineer: We need you to build a script that converts data from CSV to JSON. In about a week, we are going to have clients start sending us CSV files with data that they want put into these webpage reports. Here’s a sample, education.csv, of what their data will look like:

total students,1337
high school graduation rate,84%
median teacher pay,$38030

Lead Engineer: We are putting out a specification to our clients that we will accept data in this format. Each line of the file represents a label-value item of report data. Each line can only have one comma. The data before the comma will be a “label”, and the data after the comma will be the “value”.

Lead Engineer: We need to turn this CSV file into a JSON file that will look like this:

[
  {
    "label": "total students",
    "value": "1337"
  },
  {
    "label": "high school graduation rate",
    "value": "84%"
  },
  {
    "label": "median teacher pay",
    "value": "$38030"
  }
]

You: So the CSV is the input, and the JSON is the output. Yeah, I can write a Node script that does this. We can call it parse.js.

Lead Engineer: Perfect. Don’t use any third-party packages just yet. I want you to go on a research spike with this one. Feel out the problem area. Just make it work with the fake CSV file above first. We can evaluate refactoring or rewriting it later. For now, build it using Node’s built-in File System API.

You: Got it - use the fs module. What about testing?

Lead Engineer: Great idea. Make a test.js script with a couple of assertion statements. Use Node’s built-in Assertion Testing module to make sure the education.json file looks the way we think it should.

You: Got it - use the assert API.

Lead Engineer: Perfect. Test the JSON file, too – make sure your data is coming out the way you want it.

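Lead Engineer: Let’s talk about how to structure the code. Your eventual file structure should look something like this:

data-processing-backend/
  README.md
  run-pipeline.sh
  data/
    raw/
      education.csv
    processed/
      education.json
  scripts/
    parse.js
    test.js
    server.js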

You: OK, so two top-level folders, scripts and data. And a root-level run-pipeline.sh file so we can run everything at once.

Lead Engineer: Exactly. I want to keep the data totally separate from the code. I’ll break it down a little more:

Lead Engineer: The data folder has two sub-folders, raw and processed. Our parse.js script needs to read CSV files from the raw folder, convert them to JSON, and then write the results to JSON files in the processed folder.

You: Do we care about overwriting files in /data/processed?

Lead Engineer: No.

Lead Engineer: OK, then I broke all of our scripts out into their own scripts folder. These may eventually become their own services with their own code repositories one day, but for today, let’s just keep them together in the scripts folder. At the end of this project

You: What is that /scripts/server.js file?

Lead Engineer: I thought you’d never ask. Implement a super-simple HTTP server. You only need to serve one API endpoint; put it at the path /api/reports. For today, all it needs to serve is the education.json file that your parsing script created.

You: Got it. Make a super-simple JSON API. I will use Node’s http module.

Lead Engineer: Perfect. Here’s what I expect: I want to be able to clone the repository. There should be nothing in the data/processed folder and only education.csv in the data/raw folder. I want to be able to run sh run-pipeline.sh from the root folder and have that script 1) run the parsing script that creates the JSON file, 2) run the testing script, and then 3) start the server.

For simplicity’s sake, here is the run-pipeline.sh script:

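# Stop the pipeline if any command fails (e.g. a failed assertion in test.js)
set -e

# Start clean. git does not track empty folders, so (re)create
# data/processed in case this is a fresh clone.
rm -rf data/processed/*
mkdir -p data/processed

cd scripts

# 1. Parse the CSV files in data/raw into JSON files in data/processed
echo "1. Creating new JSON."
node parse.js

# 2. Test the files in data/processed
echo "2. Testing."
node test.js

# 3. Run server
echo "3. Now running new server.
- See API at http://localhost:3000/api/reports
- Control-c to end this process.
- Command-t for new Terminal tab."
node server.js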

And here is the README.md for the repo:

# Data Processing Backend README

This repository represents the backend work for our data reports.

You can run all of the code at once by running the command `sh run-pipeline.sh` from the repository root.

Requirements: bash shell, Node.js v8.11.0

## Data Processing

The `parse.js` script takes CSV files from the `data/raw` folder and converts their data to JSON files in the `data/processed` folder.

The `test.js` script makes sure the data processing occurred correctly.

## Server/API

The `server.js` script instantiates an HTTP server with an `/api/reports` endpoint.

You: Thanks! Working on the documentation with me before coding helps me understand the requirements of the system.

Lead Engineer: Yep, that’s why we do it. Anyways, name this repository data-processing-backend.

CEO: Do you think you can have this done by close of business tomorrow?

Make a plan

When you’re thinking about a complex software problem, the best way to start is to break the overall problem into smaller components that you can then solve one at a time.

Let’s think of each script as a component. You have to create parse.js to convert data from a CSV file to a JSON file. You have to create test.js to test that file (easy enough). These two tasks are pretty related. Unrelated to those is the creation of an API - server.js. At the top of all of this is a convenience shell script, run-pipeline.sh.

When identifying these components and how they work together, it is often helpful to diagram the process. Visualize your system, its components, and how they interact with one another.

Here, we make a top-level distinction between the frontend and backend: they will communicate over HTTP. Other than ultimately testing that our API endpoint, http://localhost:3000/api/reports, works, we need not worry about the client.

The order of operations in run-pipeline.sh (parsing, testing, serving) follows the way data flows through this server-side system, so we will tackle the individual script components in that order, one at a time.

Write the code

Setup and Dummy Data

Create a repository named data-processing-backend on GitHub and then clone it to your desktop:

cd ~/Desktop

git clone https://github.com/<zero-to-code>/data-processing-backend

cd data-processing-backend

Now we will make all of the files and folders we discussed with the Lead Engineer, with the exception of education.json: that is the file parse.js will create programmatically.

At the root level:

touch run-pipeline.sh README.md

mkdir scripts data

Go ahead and copy the shell script and README from the dialogue above.

Now let’s make the JavaScript scripts:

cd scripts

touch parse.js test.js server.js

Now the data folders and file:

cd ../data

mkdir raw processed

cd raw

touch education.csv

Add the CSV data as you see it in the dialogue above. You may get unexpected results if you don’t copy it exactly.
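Since the spec allows exactly one comma per line, a quick sanity check of your copy can save some confusion later. The sketch below is our own addition, not one of the Lead Engineer’s requirements; findSpecViolations is a hypothetical helper that returns the 1-based line numbers of non-blank lines that don’t contain exactly one comma.

```javascript
// Return the 1-based line numbers of non-blank lines that break the
// "exactly one comma per line" rule from the spec.
function findSpecViolations(csvText) {
  const violations = [];
  csvText.split('\n').forEach((line, index) => {
    const trimmed = line.trim();
    if (trimmed === '') return; // blank lines (e.g. a trailing newline) are ignored
    const commaCount = trimmed.split(',').length - 1;
    if (commaCount !== 1) violations.push(index + 1);
  });
  return violations;
}

// "$38,030" contains a second comma, so line 3 breaks the spec.
const sample = 'total students,1337\nhigh school graduation rate,84%\nmedian teacher pay,$38,030\n';
console.log(findSpecViolations(sample)); // [ 3 ]
```

To check the real file, call it from the repository root with fs.readFileSync('data/raw/education.csv', 'utf-8') as the argument (after const fs = require('fs');). An empty array means every line follows the spec.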

We’re ready to get started. Save your set-up work:

git add .

git commit -m "Initial file setup + creation. Added dummy data in education.csv, copied README and run-pipeline.sh."

The i/o work: parse.js

Reading existing files (like education.csv) and creating new files (like education.json) is incredibly easy with Node’s fs module. As you probably guessed, fs stands for file system. You can read a file into your program as a string with the fs.readFileSync method; all it needs is the path to the file and the character encoding (which will usually be ‘utf-8’).

const fs = require('fs');
const fileString = fs.readFileSync('path/to/file.csv', 'utf-8');

The real bulk of the work is the parsing: creating the logic for turning comma-separated values into an array of JavaScript objects, which we then serialize as JSON. We want to turn each row of the CSV file into an object. Each row represents a report item. Each report item has a label and a value, separated by a comma. The text before the comma is the label. The text after the comma is the value.

Our parsing logic will parse the CSV for these objects and convert them to JavaScript objects. Once we encapsulate each row’s information inside of an object, we will aggregate them in an array.

Here is our logic at a more granular level:

- Get the data as a string: Read in the data/raw/education.csv file with fs. fs will turn it into a JavaScript string we can bind to a variable.

- Break the string into an array of rows: Each newline is marked by a \n character in the document. Using the String data type’s built-in split method, split the giant string into an array, using newlines as the delimiter. The end result will be an array of strings, where each string is a row of CSV data.

- Get the label and value from each row: Iterate over all of the rows, splitting each row into its own array, using the comma as a delimiter. Now you have your label and value values.

- Restructure data: Turn them into a JavaScript object.

- Aggregate restructured data: As you iterate over the rows, aggregate all these new objects in an array.

- Serialize the data: When iteration is done, JSON.stringify the array.

- Write to education.json: Use the fs module to write the resulting JSON string to a file located at data/processed/education.json.

Here’s the code:

// By convention, module imports go at the top of a file.
const fs = require('fs');

const allItems = [];

const csvFile = fs.readFileSync('../data/raw/education.csv', 'utf-8');
const csvRows = csvFile.split("\n");

// The JS Array data type has a built-in forEach method.
// Want a challenge? Rewrite this code using the built-in map method.
csvRows.forEach(createReportItem);

function createReportItem(csvRow) {
  const keyValue = csvRow.split(",");
  // Make sure you only have a label and a value
  if (keyValue.length === 2) {
    const reportItem = { label: keyValue[0], value: keyValue[1] };
    allItems.push(reportItem);
  }
}

// Serialize and save
const reportJSON = JSON.stringify(allItems);
fs.writeFileSync('../data/processed/education.json', reportJSON);
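Once parse.js works, one refinement worth considering is to move the parsing logic into a function that takes a string and returns the array, so it can be tested without reading or writing any files. The sketch below is our own variation on the code above, not part of the Lead Engineer’s spec: parseReportCsv is a hypothetical name, it also accepts Windows-style \r\n line endings and trims stray spaces, and it pretty-prints the JSON using JSON.stringify’s third (indentation) argument. It keeps a for...of loop so the map exercise at the end of the chapter is still yours to solve.

```javascript
// Turn CSV text in the "label,value" format into an array of report items.
// Lines without exactly one comma are skipped, as in parse.js.
function parseReportCsv(csvText) {
  const items = [];
  // Split on \n or \r\n so files saved on Windows parse the same way.
  for (const row of csvText.split(/\r?\n/)) {
    const keyValue = row.split(',');
    if (keyValue.length === 2) {
      items.push({ label: keyValue[0].trim(), value: keyValue[1].trim() });
    }
  }
  return items;
}

const sample = 'total students,1337\r\nhigh school graduation rate,84%\r\n';
const items = parseReportCsv(sample);

// The third argument to JSON.stringify indents the output by 2 spaces.
console.log(JSON.stringify(items, null, 2));
```

Inside parse.js you could then write the file with fs.writeFileSync('../data/processed/education.json', JSON.stringify(parseReportCsv(csvFile), null, 2));. Pretty-printing changes only whitespace, so JSON.parse in test.js reads the result exactly the same way.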

Test, Iterate, Commit

Now try running this. From the scripts directory:

node parse.js

If this script is working correctly, it should have created a file named education.json in the data/processed directory. Run it a few times. The script should replace the old file with a new one each time.

git add parse.js

git commit -m "parse.js script translating education.csv sample to education.json"

Quality Assurance: test.js

Testing is a broad and important subject. There are many different ways to test software. Many teams have “Quality Assurance engineers” or “testers”, whose entire jobs revolve around making sure software does what it is supposed to do. These people, however, are not the ones writing your code. As such, it is your job to pick up your part of the quality assurance slack by writing tests for your code.

Use tests to make sure your code does what you think it is supposed to do. Testing has the added benefit of making you think more about your code, which almost always results in better, more robust, less bug-prone code. Some people believe this so strongly that they follow a practice known as TDD, or Test-Driven Development. In TDD, coders write their tests before they write the code they’re testing. At first all of the tests fail; then the coders write code that makes the tests pass. Since we already wrote some code, we are not following TDD here.

The Lead Engineer also told you to perform some assertion tests on the education.json file. Assertion testing is exactly what it sounds like: you make an assertion and then test whether it is true. What can we test about the education.json file we generated? We want to check whether it has the same structure as the sample JSON the Lead Engineer gave us. That’s how we know we’re up to spec.

Let’s make some observations about the Lead Engineer’s sample JSON:

- The top-level data object is an array, which has a type of “object”

- The array has 3 member objects

- Each member object has a label and a value.

Let’s test whether we can assert the same things about our generated JSON file! To do this, we will:

- Import the fs and assert modules.

- Read in the data/processed/education.json file.

- Use JSON.parse to convert the file to a JS object.

- Run assertion tests on this JS object to see if it matches the observations we made above.

const assert = require('assert');
const fs = require('fs');

const report = fs.readFileSync('../data/processed/education.json', 'utf-8');
const reportData = JSON.parse(report);

// Arrays have a typeof of "object". (Array.isArray(reportData) is a stricter check.)
assert(typeof reportData === "object", "FAILED: The typeof JSON should be 'object'.");
assert(reportData.length === 3, "FAILED: The array should have three members.");

for (let i = 0; i < reportData.length; i++) {
  const reportItem = reportData[i];
  assert(reportItem.label !== undefined, "FAILED: Report item missing label.");
  assert(reportItem.value !== undefined, "FAILED: Report item missing value.");
}

console.log("TESTING COMPLETE.");

As you can see, the assert function takes two arguments. The first argument is the test: any JavaScript expression that evaluates to true or false. The second argument is an error message. If the first argument evaluates to false, assert throws an AssertionError carrying that message. Because nothing in test.js catches the error, Node prints it and exits with a non-zero exit code, so the script stops at the first failing assertion.

Test, Iterate, Commit

Run your Node script:

node test.js

If all of your tests pass, you will only see the “TESTING COMPLETE.” message. I recommend monkeying with the tests and making them fail, so you can see what it looks like. Try adding some tests of your own. When you’re done, save, commit, and push. You’ve done too much work to just save it all on your local machine at this point! From the root of the repo:

git status

git add scripts/test.js

git status

git commit -m "Assertion tests for education.json."

git push

A web server with a data API: server.js

Now that all of the data is parsed into JSON, we are ready to share that JSON with the world. It’s time for you to create your first web server. A web server is a program that runs as a long-lived process (here, one started from the command line) and listens for incoming HTTP requests on a specific port. After processing each request, the server sends back a response.

Here we will listen for HTTP requests on port 3000, i.e. at http://localhost:3000. If any client application requests the /api/reports resource, we will return our education JSON. If it requests any other path, we will send back a 404 error message (404 is the HTTP status code for Not Found). Remember: HTTP messages are just meta-information (headers) and data (the body).
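To make “headers plus body” concrete, the sketch below assembles, by hand, roughly the text a server sends back over the network. It is for illustration only; formatResponse is a hypothetical helper, and in server.js Node’s http module builds this text for you (adding a few more headers, such as Date).

```javascript
// Build the raw text of a minimal HTTP/1.1 response: a status line,
// one "Name: value" line per header, a blank line, then the body.
// HTTP uses \r\n (CRLF) line endings.
function formatResponse(statusCode, reason, headers, body) {
  const lines = [`HTTP/1.1 ${statusCode} ${reason}`];
  const allHeaders = Object.assign({}, headers, {
    // Content-Length counts bytes, not characters.
    'Content-Length': Buffer.byteLength(body, 'utf8')
  });
  for (const name of Object.keys(allHeaders)) {
    lines.push(`${name}: ${allHeaders[name]}`);
  }
  return lines.join('\r\n') + '\r\n\r\n' + body;
}

const body = JSON.stringify([{ label: 'total students', value: '1337' }]);
console.log(formatResponse(200, 'OK', { 'Content-Type': 'application/json' }, body));
```

The blank line is what separates the meta-information from the data: everything before it is headers, everything after it is the body.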

You start this process with node server.js. Once started, the process will run continuously in your terminal until you kill it, either by pressing control-c, closing the Terminal window, or otherwise making the program crash. While it is running, you should be able to navigate to http://localhost:3000/api/reports and receive the JSON. If you navigate to any other path, you will see the 404 error message.

At a more granular level, here’s our coding plan:

- Import the http and fs modules.

- Create the HTTP server.

- The HTTP server will use a “request handler” callback function.

- The callback function checks which path a client has requested.

- If the path is /api/reports, attach appropriate headers for success and JSON data. Use fs to get the JSON. Send the response with the JSON as the HTTP body.

- If the path is anything else, send 404 headers and an error message body.

This is how the code looks:

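const http = require('http');
const fs = require('fs');
const path = require('path');

const server = http.createServer(handler);

function handler(request, response) {
  if (request.url === "/api/reports") {
    // Build the path from this file's folder (__dirname), so the server also
    // finds the JSON when started from the repository root.
    const jsonPath = path.join(__dirname, '../data/processed/education.json');
    const json = fs.readFileSync(jsonPath, 'utf8');
    response.writeHead(200, {'Content-Type': 'application/json'});
    response.end(json);
  } else {
    response.writeHead(404, {'Content-Type': 'text/plain'});
    response.end("Error: Requested resource not found.");
  }
}

// Listen for requests at http://localhost:3000
server.listen(3000, 'localhost');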
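This handler does what the spec asks, but two rough edges are worth knowing about: request.url includes any query string, so /api/reports?year=2018 gets a 404, and if education.json is missing, readFileSync throws and the whole server crashes. Below is one possible hardening, sketched under our own assumptions: createHandler and readEducationReport are hypothetical names, and passing in the function that loads the JSON lets you test the handler with fake request and response objects instead of a running server.

```javascript
const fs = require('fs');
const path = require('path');
const { URL } = require('url');

// Build a request handler. readReport is any function that returns the JSON
// string to serve; injecting it keeps the handler easy to test.
function createHandler(readReport) {
  return function handler(request, response) {
    // Parse the URL so query strings like ?year=2018 don't break the match.
    const pathname = new URL(request.url, 'http://localhost').pathname;
    if (pathname !== '/api/reports') {
      response.writeHead(404, { 'Content-Type': 'text/plain' });
      response.end('Error: Requested resource not found.');
      return;
    }
    let json;
    try {
      json = readReport();
    } catch (err) {
      // e.g. the file hasn't been generated yet: report it instead of crashing.
      response.writeHead(500, { 'Content-Type': 'text/plain' });
      response.end('Error: Report data unavailable.');
      return;
    }
    response.writeHead(200, { 'Content-Type': 'application/json' });
    response.end(json);
  };
}

// Reads the file relative to this script's folder, whatever the working directory.
const readEducationReport = () =>
  fs.readFileSync(path.join(__dirname, '../data/processed/education.json'), 'utf8');

module.exports = { createHandler, readEducationReport };
```

To serve it for real, you would replace the createServer and listen lines of server.js with require('http').createServer(createHandler(readEducationReport)).listen(3000, 'localhost');.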

Test, Iterate, Commit

Run this server with node server.js. Then open another tab in your Terminal window with command-t and, in that new tab, run curl http://localhost:3000/api/reports. This should show you the JSON. Requesting any other path at localhost:3000 should show you the plain-text error message. If you run curl with the --verbose option, you can also see all the HTTP headers.

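From the root level of the repository, save, commit, and push. Note the single quotes around this commit message: inside double quotes, bash would treat the backtick-quoted text as commands and actually run them.

git status

git add scripts/server.js

git commit -m 'Initial web server. Can be launched with `node scripts/server.js` or with `sh run-pipeline.sh`. Creates API endpoint at http://localhost:3000/api/reports.'

git push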

Run the pipeline like a boss: run-pipeline.sh

You should now be able to run the shell script that runs all of your Node scripts: sh run-pipeline.sh. Congratulations!

Recap

As Zero To Code was being written, it was announced that the Node Foundation and JavaScript Foundation were merging. JavaScript’s future is now tied to Node’s.

You have now written three Node scripts: one for data processing, one for testing, and one for a web server process. You learned how to import Node.js’s core modules with require and how to run JavaScript on the command line.

This is all backend work. By virtue of learning how to run servers that clients can talk to, you are beginning to become a back-end engineer, in addition to being a front-end one. At a minimum, you probably have a much greater appreciation for the client-server model. This is the stuff of which full-stack engineers are made.

In the next chapter, we will explain how to take your Node code and workflows to the next level with the Node Package Manager.

Exercises

- Refactor the parse.js file to use the JavaScript Array data type’s built-in map method instead of forEach. map works similarly to forEach: it passes each member of an array to a callback function. Unlike forEach, though, map automatically collects the return values of those function calls in a new array and returns that array at the end. When we used forEach, we had to aggregate our data manually, in an array we created ourselves. map can make our code more elegant. (Hint: map returns one value for every row, so rows without exactly one comma still need to be removed; the Array filter method can help.)

Chapter 5, “Higher-Order Functions”, of Eloquent JavaScript discusses these functional programming topics in greater detail.

- Add 5 more assert tests to test.js.

- Add another API endpoint, /hi-there, to server.js. This endpoint should return a JSON object: {"hello": "there"}.

- Spend 5-10 minutes familiarizing yourself with the official Node.js website, located at nodejs.org. Locate the API documentation for Node.js version 8.11.0. Find the documentation for the File System, HTTP, and Assert modules.

- Skim Chapter 12 of the Linux Book - The Environment.

- We talked a bit about how programmers configure their shell environments by setting environment variables and/or using dotfiles, e.g. setting the PATH variable in a ~/.bash_profile. Using environment variables for configuration is a best practice.

Read the Introduction and (at least) the first three sections of “12 Factor App”, a manifesto for building robust web applications: https://12factor.net. It’s not a bad idea to re-read it regularly for the next year or three.

- If you need to edit your own ~/.bash_profile at some point, I recommend doing so with the Vim text editor, which comes preinstalled on macOS and many Linux distributions. Vim usually ships with a built-in tutorial that you can start by running the command vimtutor.